Name:

Hrishitva Patel

Email:

About the author-

Hrishitva Patel is a researcher and a technology enthusiast. His fields of research interest include qualitative and quantitative research methods in the field of Management information systems and Computer science. He has a knack for chess and a passion for painting and reading books.

Abstract

Scalability is the capacity of IT systems, including networking, storage, databases, and applications, to continue performing effectively when their volume or size changes. It frequently refers to adjusting resources in order to satisfy either higher or reduced commercial demands. There are two different types of scalability, vertical and horizontal. Vertical scalability raises the capacity of hardware or software by adding resources to a physical system. Horizontal scalability unify several things together to function as a single logical unit.

A system that is scalable is capable of adapting quickly to changes in workloads and user demands. The capacity of a system to adapt to changes by adding or withdrawing resources to meet demand is known as scalability. The networks, applications, processes, and services that make up your complete system are built using the hardware, software, technology, and best practices that make up the architecture. In this literature review we will analyze how scalability works and analyze the different types of scalability patterns.

Introduction

One of the most commonly mentioned benefits of multiprocessor systems is scalability. Although the fundamental idea is obvious, there is no widely agreed-upon definition of scalability. Due to this, contemporary usage of the phrase increases commercial possibilities more than technical implications.

Scalability indicates a favorable comparison between a larger version of some parallel system and either a sequential version of that same system or a theoretical parallel machine, according to an intuitive understanding of the concept.

Scalability can be defined in different ways. It can be related just to the ability of controlling the workload as well as to the ability to increase the workload according to the cost-effective strategy to extend the system’s capacity (Weinstock, 2006).

Whenever the attention is given to the ability to control the workload, the system fades into the background, while if we consider scalability related to a specific system, we can understand how it actually operates differently, based exactly on the type of system used. For this reason in this literature review we will focus on how scalability operates in different information system, such as: distributed information, scalable enterprise applications, P2P systems, access control system servers and cloud audio scaling.

It is nearly always necessary to make trade-offs with other system characteristics when designing a system to be scalable (in any sense). It could be required to forgo certain levels of performance, usability, or another crucial aspect, or to pay a significant monetary price, in order to obtain ever-higher levels of operation.

When considering scalability, the tradeoff is something that constantly comes to mind. When demand (for example, the number of users) or system complexity (for example, the number of nodes) rises above a certain point, performance in a non-scalable system frequently suffers. By sacrificing some performance at the lower levels of utilization, it could be possible to reach higher levels of consumption without experiencing a pronounced loss of performance (Weinstock, 2006).

Scalability is not a granted process and can in fact fail causing the overloading or exhaustion of the resources. The reason behind the system failure are: exceeded available space, overloaded memory, excess of available network and filled internal table. In this research we will analyze scalability entirely by studying the possible outcomes of the systems examined.

Body

1.1 Distribued information system

As mentioned previously, in this literature review we will focus on scalability, not on a general level but rather by analazying they way it operates with different systems.

The first system that I would like to point my attention to is the distributed information system. The primary advantage of distributed systems is scalability. Increasing the number of servers in your pool of resources is known as horizontal scaling. Adding extra power (including CPU, RAM, storage, etc.) to your existing servers is known as vertical scaling.

The horizontal scaling of Cassandra and MongoDB is a good illustration. They facilitate horizontal scaling by introducing more machines. MySQL is a prime example of vertical scaling because it grows by moving from smaller to larger machines (Fawcett, 2020).

A distributed system is essentially a group of computers that collaborate to create a single computer for the end user. These distributed machines all work simultaneously and share a single state.

Similar to microservices, they can fail independently without harming the entire system. A network connects these autonomous, interdependent computers, enabling simple information sharing, communication, and exchange.

In contrast to traditional databases, which are maintained on a single machine, a user must be able to interface with any machine in a distributed system without realizing that it is only one machine. Today’s applications must take into account the homogeneous or heterogeneous nature of distributed databases, which are used by the majority of programs.

Each system shares a data model, database management system, and data model in a homogenous distributed database. In general, adding nodes makes these easier to manage. When using gateways to convert data between nodes, heterogeneous databases enable the use of several data models or various database management systems. The most common kinds of distributed computing systems are: distributed information systems, distributed pervasive systems and distributed computing systems.

There are both pros and cons in using a distributed system. Among the pros we need to remember scalability (which will occur only horizontally), modular growth, cost effective and parallelism. The latter refers to the design of multiple processor in dividing up a complex problem. However, as mentioned previously, distributed system can also provide some cons, such as: failure handling, concurrency and security issues.

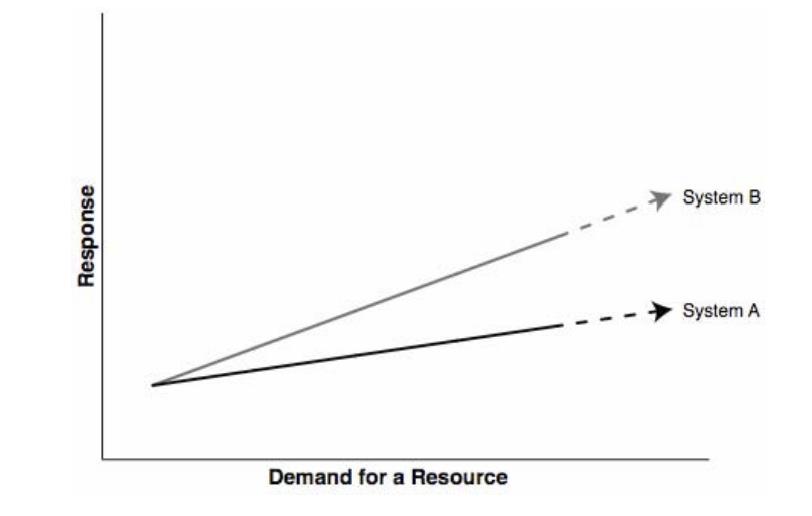

Failure handling consists in the inability of response of the demand for a resource. As shown in figure 1, the vector increases in a constant manner, which means scalability is happening in a correct way (Weinstock, Goodenough 2006).

Image from: On System Scalability, Weinstock, Goodenough 2006.

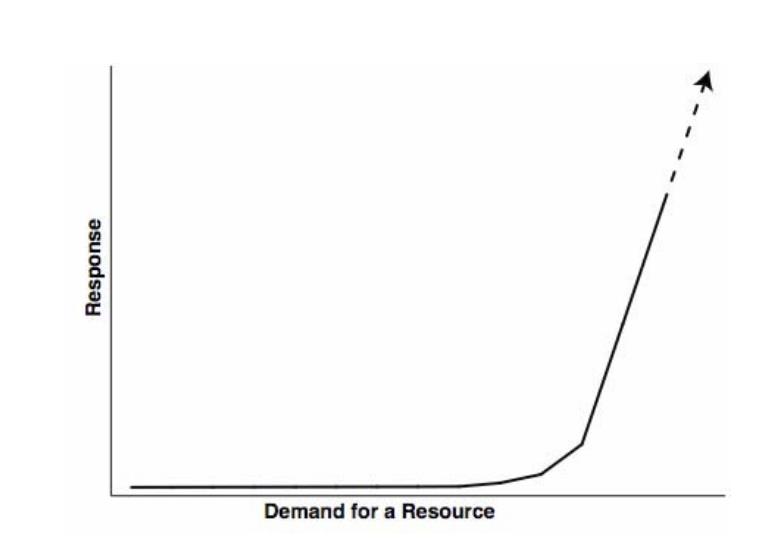

As visible in figure 2, the response in the demand for a resource was originally plane, and increased all of a sudden, generating to, what we refer as failure handling.

Image from: On System Scalability, Weinstock, Goodenough 2006.

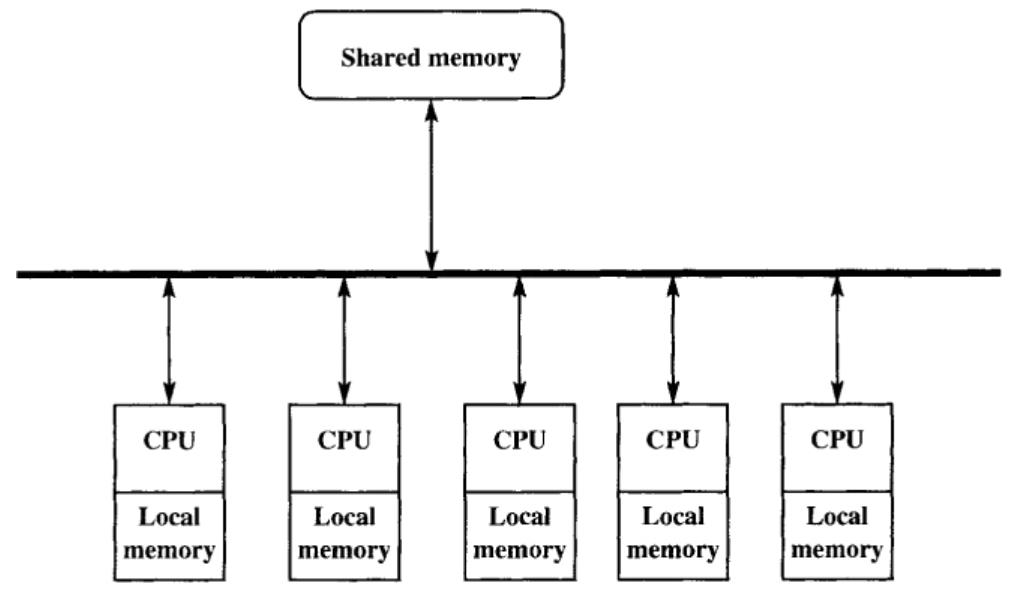

In a concurrent system, two or more activities (such as processes or programs) go forward concurrently in some way. Concurrency referes to the attempt of several clients to access a shared resource simultaneously.

Image from: Concurrent and Distributed Computing in Java, Vijay K. Garg, 2004

In distributed computer systems, there are greater hazards related to data sharing and security. Users must be able to access duplicated data across various sites safely, and the network must be secured.

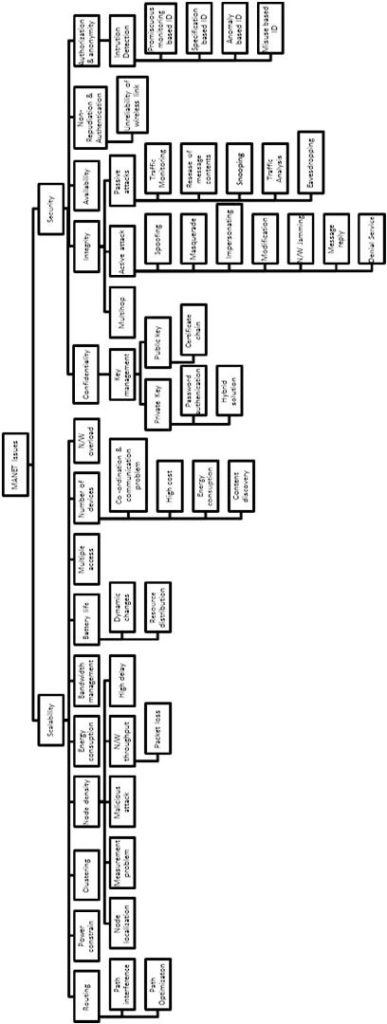

Image from: Review on MANET Based Communication for Search and Rescue Operations, 2017

Distributed systems are utilized in a wide range of applications, including multiplayer online games, sensor networks, and electronic financial systems. Distributed systems are used by many businesses to power content delivery network services. Transport technology like GPS, route finding systems, and traffic control systems all leverage distributed networks. Due to their base station, cellular networks are another illustration of distributed network systems.

1.2 Scalable enterprise applications

An application’s scalability refers to its capacity to support an increasing number of users, clients, or customers. The consistency of the app’s data and the developer’s capacity to maintain it are directly correlated.

Application scalability provides the assurance that, over time, your program will be able to sustain a rapid increase in its user base. An app must be correctly configured in order to accomplish this. This indicates that the hardware and software are coordinated to handle a large volume of requests per minute (RPM).

Every time a user interacts with your app, a request is generated, thus it’s imperative to respond to each one. In order to achieve the best level of user interaction a boost in the processing power of a computer is needed, or even better, the engagement of a flexible web service provider. This makes it possible to hire only the amount of capacity you actually require, ensuring that your app’s performance is unaffected and that your users are satisfied (Kazmi, 2023).

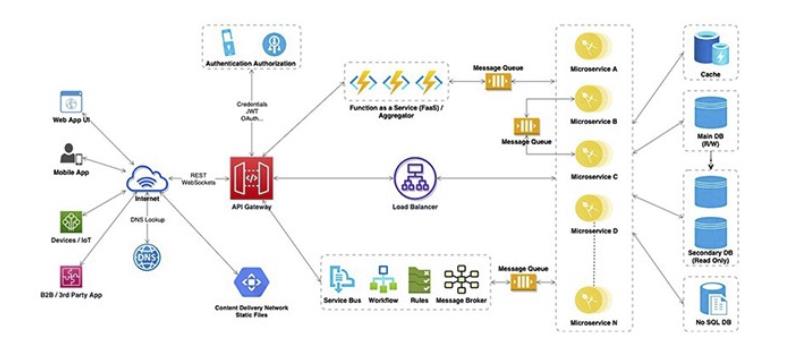

Scaling horizontally is possible with many servers. An essential component of a scalable backend is horizontal scaling. Using numerous servers to successfully serve the same purpose is known as horizontal scaling. Since each of these servers has access to the same logic and database, they may all do the same duties. Traffic is then divided among these servers using load balancers.

Load balancers are used in scalable server infrastructure to distribute requests over a number of servers rather than clogging up a single server with them. Serving as a go-between for the client (frontend) and servers, therefore a load balancer. By the use of load balancers, traffic will be routed to the proper servers in a way that increases server productivity and speeds up page loads.

As mentioned previously, scalability contributes to several benefits and can sometimes bring some negative aspects. Scalable applications are extremely useful as well as handy, and usually they have more pros than cons associated with, depending on how well they function. One of the most relevant positive aspect is certainly the user experience. Since scalability has impact on both front-end and back-end, it is important to give the right balance, in order to garantee functionality.

Another important aspect associated with scalability is cost-effectiveness. Since the cost is associated with the number of users, it is important, especially for start-ups to start with MVP, or minimum viable product, in order to gather the necessary data which will allow to gather enough feedback and optimize cost-effectiveness. However, as soon enough data is collected, it is needed to remove the MVP, otherwise performance will stay low, or in the worst case scenario, decrease (Chandra, 2021).

Apps scalability is also quite important to mantain performance stability, which will remain positive depsite the high user traffic. It will also provide feature personalization based on the user experience and feedbacks, as well as helping to manage the number of users. It will therefore guarantee an optimazation of the app.

Image from: How to build Scalable and Robust Enterprise Web Application? Chandra, 2021

1.3 P2P systems

When two or more personal computers (PCs) connect to one another and share resources directly with one another rather than going via a separate server, a peer-to-peer (P2P) network is created. This straightforward connection can be used as ad hoc means of connecting PCs through USB in order to transfer files. A network that connects many gadgets in a small office using copper cable can also be a permanent infrastructure investment.

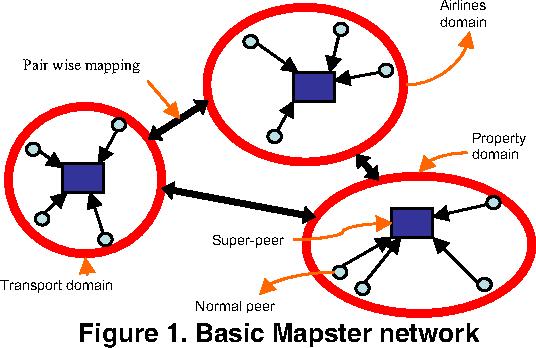

Machines in a P2P system are given symmetric roles so they can function as both clients and servers. This eliminates the requirement for any central component to keep up-to-date knowledge of the system globally. Instead, depending on local knowledge of the rest of the system, each peer makes independent judgments, enabling scalability by design (Kermarrec & Taiani, 2015).

Each peer in a P2P system can function as both a client and a server, but they only keep a localized perspective of the entire system. The scalability of P2P systems has been employed in the context of many other applications, including streaming, content delivery networks, broadcast, storage systems, and publish-subscribe systems, to mention a few. This paradigm has been widely used for (often illegal) file sharing applications.

P2P systems are scalable by design since each peer may function as a server in some circumstances. This avoids the bottleneck that most distributed systems have because the number of servers grows according to the number of clients. The fact that no entity is required to maintain a worldwide knowledge of the system, a costly and challenging activity in large-scale systems, complements this system’s inherent ability to grow. Instead, each peer’s conduct is only influenced by local and constrained knowledge. For starters, each peer only needs to analyze a limited amount of data, which guarantees scalability. Second, communication expenses are decreased because knowledge typically only needs to be shared with a small group of peers (Ibid).

However, as everything described on this paper, P2P networks do have pros and cons too. Among the pros, we need to surely mention that in a peer-to-peer network, information is distributed decentralized rather than through a central system. This implies that each terminal can operate independently of the others. The remainder of the network won’t be affected if one of the machines crashes for whatever reason. Another pro could be that a P2P network does not need a server because each terminal acts as a data storage location. Authorized users can use their assigned or personal devices to log onto any computer linked in this fashion. Moreover, due to the fact that everything takes place at the user terminal, peer to peer networks don’t require as many specialized personnel as other connections. There is no need to restart the downloading process from scratch if a stoppage happens for any reason, including the unexpected shutdown of your terminal. You can continue with the acquisition procedure once you can get your device back online or linked to the rest of the system (Galle, 2020).

Among the disadvantages there is the fact that a P2P network uses personal computers to store files and resources rather than a publicly accessible hub. This means that when a PC owner doesn’t appear to have a logical filing system, it may be difficult for some users to locate specific files. In addition to it when a peer-to-peer network is used, it is the responsibility of each individual user to avoid the introduction of malware, viruses, and other issues. Another disadvante that deserve attention is that each terminal can access every other device linked to it while users are operating in a peer-to-peer network. This implies that any moment, any user who is currently logged in to the system may access any computer (ibid).

Image from: A Scalable P2P Database System with Semi-Automated Schema Matching, Rouse, Berman, 2006.

1.4 Access Control System Servers

The access control database contains a sizable amount of digital data that has been indexed. It can be efficiently and cheaply searched, referred to, compared, altered, or otherwise handled. It is a logical organization of the data that contains all the hardware, operation, and record-based information for access control servers. This data is used by the software to give the user control and information in a natural language. The system can be configured by the user to behave in the way they want.

A server where the permissions are kept in an access control database is required for any access control system. It serves as the “brain” or “node” of the access control system as a result. By comparing the provided credential to the credentials approved for this door, the server determines if the doors should unlock. The access control server-based system keeps track of all access-related activity and events, logs them, and compiles reports on previous data events over a specified time frame. Consider a machine that hosts an access control server locally. In that instance, the access control server-based software is normally executed on a dedicated workstation. The master device that transmits the access control database to all controller boards and programs it is the access control server computer (Dicsan Technology, 2023)

Access control models are a crucial instrument created for protecting the data systems of today. Access control models are specifically used by institutions to manage the entire process by defining who their personnel are, what they can accomplish, which resources they can access, and which operations they may carry out. Institutions using distributed database systems will find this to be a very challenging and expensive task. The conditions for defining users’ demands to access resources that are dispersed across multiple servers, each of which is tangentially connected to the others, the verification and authorization of those user demands, and the ability to track users’ actions cannot, however, be configured in an effective manner (Guclu, 2020).

Armoured viruses, ransomware, and cryptoLocker malware are some of the new threats that are destroying information systems and resources today. Even when every precaution is taken to shield the systems from these dangerous risks, attacks nonetheless occasionally succeed. Every event that compromises one of the three fundamental principles of information security—accessibility, confidentiality, or integrity—is considered a security breach. Some violations result from unintentional software or hardware failures, while others happen when the systems are purposefully made inaccessible and services are interrupted. Security breaches, whether accidental or intentional, have a negative impact on an institution’s productivity and dependability (Ibid).

1.5 Cloud Auto-Scaling

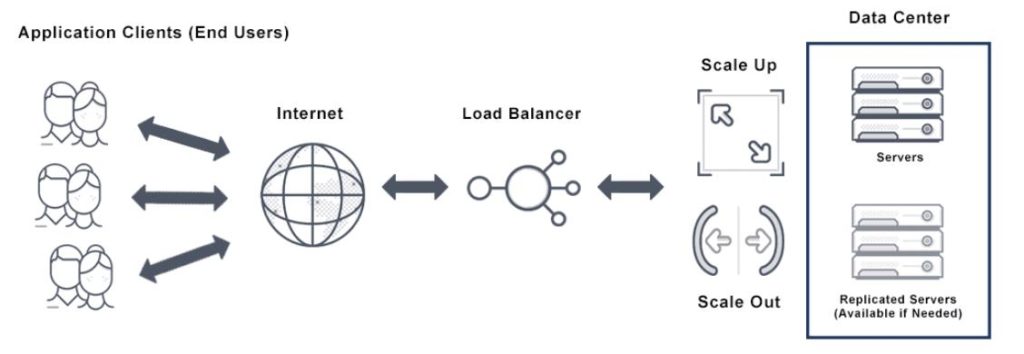

The process of automatically increasing or decreasing the computational resources given to a cloud task based on demand is known as autoscaling (sometimes spelled auto scaling or auto-scaling). When setup and managed correctly, autoscaling’s main advantage is that your workload will always receive exactly the amount of cloud computing resources that it needs, never more or less. You only pay for the server resources you use at the time of use.

A cloud computing technique for dynamically assigning processing resources is known as auto scaling. The number of servers that are active will normally change automatically as user needs change depending on the demand on a server farm or pool. Because an application often scales based on load balancing serving capacity, auto scaling and load balancing go hand in hand. In other words, the auto scaling policy is shaped by a number of parameters, including the load balancer’s serving capacity and CPU utilization from cloud monitoring (Avi Networks).

By effortlessly adding and removing additional instances in response to demand peaks and valleys, core autoscaling capabilities also provide lower cost, dependable performance. In light of this, autoscaling offers consistency despite the fluctuating and perhaps unforeseen demand for applications. The general advantage of autoscaling is that by automatically adjusting the active server count, it reduces the need to manually react in real-time to traffic surges that necessitate more resources and instances. The heart of autoscaling involves configuring, monitoring, and retiring each of these servers.

As everything described in this literature review, we will now take a quick look at what are the advantages and disadvantage on using auto-scaling cloud systems.

Among the advantages cost, security and availability certainly need attention. Both firms using their own infrastructure and those utilizing cloud infrastructure can put some servers to sleep when traffic levels are low thanks to auto scaling. In cases when water is utilized for cooling, this lowers both water and electricity expenses.By identifying and replacing unhealthy instances while maintaining application resiliency and availability, auto scaling additionally guards against failures of the application, hardware, and network. Finally as production workloads are less predictable, auto scaling enhances availability and uptime.

Among the disadvantages there are complex configuration, performance degradation and inconsistency. Before autoscaling can be used efficiently, a complicated configuration setup must be put in place. This entails establishing the scaling and monitoring guidelines as well as specifying the standards for scaling up or down. When new instances are started, autoscaling may result in performance reduction since these instances need time to establish and stabilize. (Birari, 2023)

Ultimately, autoscaling depends on monitoring and scaling criteria, which, if improperly designed, might result in uneven performance.

Image from: Autoscaling: Advantages and Disadvantages, Niteesha Birari, 2023

Conclusion

As explored in this literature review, in order to have a functional system, and more importantly a business, due to the fact the most of them today heavily rely on technology, scalability is extremely important. The size or type of business does not make any difference. In fact, through the examples examined in this paper, we can agree that scalability can work with different type of systems and therefore different type of goals can be accomplished.

On a technological level, scalability is essential in order to getting more storage, or having the ability to add on helpful software or a new operating system, allowing the system to be functional by decreasing the probability to crush.

On a business level, the ability to scale is a crucial feature of enterprise software. It may be prioritized from the beginning, which results in cheaper maintenance costs, improved user experience, and more agility. A company that is scalable can expand and make profit without being constrained by its organizational structure or a lack of resources. A corporation can maintain or improve its efficiency as its sales volume rises.

References:

Birari, N. (2023, February 8). Autoscaling: Advantages and Disadvantages – ESDS Official Knowledgebase. ESDS Official Knowledgebase. https://www.esds.co.in/kb/autoscaling-advantages-and-disadvantages/

C. (2021, September 2). How to build Scalable and Robust Enterprise Web Application? | Cashapona. https://www.cashapona.com/2021/09/02/how-to-build-scalable-and-robust-enterprise-web-application/

Fanchi, C. (2023, January 31). What Is App Scaling and Why It Matters. Backendless. https://backendless.com/what-is-app-scaling-and-why-it-matters/

Gaille, L. (2020, February 18). 20 Advantages and Disadvantages of a Peer-to-Peer Network. https://vittana.org/20-advantages-and-disadvantages-of-a-peer-to-peer-network

Garg, V. K. (n.d.). Concurrent and Distributed Computing in Java. O’Reilly Online Learning. https://www.oreilly.com/library/view/concurrent-and-distributed/9780471432302/?_gl=1*13u7fam*_ga*NjUzMTk4MDMxLjE2NzgyODMxODc.*_ga_092EL089CH*MTY3ODQ0MzI0MC4zLjEuMTY3ODQ0MzI3MC4zMC4wLjA.

Kermarrec, A., & Taïani, F. (2015). Want to scale in centralized systems? Think P2P. Journal of Internet Services and Applications, 6(1). https://doi.org/10.1186/s13174-015-0029-1

Peter, S. (n.d.). 6 Ways Scalability Requirements are Impacting Today’s Cyber Security. https://blog.cyberproof.com/blog/6-ways-scalability-requirements-are-impacting-todays-cybersecurity

Telecom, B., & Telecom, B. (2018, November 13). The Importance of Scalability with IT. Beacon Telecom. https://www.beacontelecom.com/the-importance-of-scalability-with-it/

Weinstock, C. B., & John B. Goodenough. (2006). On System scalability. apps.dtic.mil. https://apps.dtic.mil/sti/pdfs/ADA457003.pdf

What are distributed systems? A quick introduction. (n.d.). Educative: Interactive Courses for Software Developers. https://www.educative.io/blog/distributed-systems-considerations-tradeoffs

What is Auto Scaling? Definition & FAQs | Avi Networks. (2022, December 30). Avi Networks. https://avinetworks.com/glossary/auto-scaling/