This article is part of the On Tech newsletter.

Many U.S. politicians and technologists believe that America would be better off if the government put more financial support into computer chips, which are like the brains or memory in everything from fighter jets to refrigerators. Proposals for tens of billions of dollars in taxpayer funding are working their way, somewhat unsteadily, through Congress.

One of the key stated goals is to make more computer chips in the United States. This is unusual in two ways: The U.S. is philosophically disinclined to help its favorite industries, and we often think about American innovation as disconnected from where stuff is physically made.

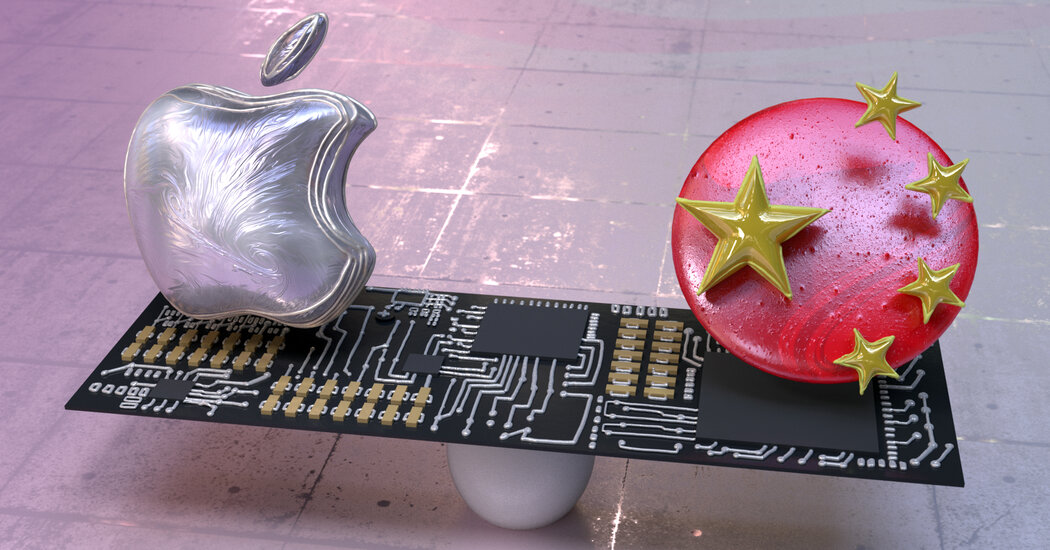

Most smartphones and computers are manufactured outside the U.S., but much of the brain power and value of those technologies are in this country. China has many of the factories, and we have Apple and Microsoft. That’s a great trade for the U.S.

Today I want to ask back-to-basics questions: What are we trying to achieve in making more chips on U.S. soil? And are policymakers and the tech industry pursuing the most effective steps to achieve those goals?

There are reasonable arguments that chips are not iPhones, and that it would be good for Americans if more chips were manufactured in the U.S., even if it took many years. (It would.) But in my conversations with tech and policy specialists, it’s also clear that supporters of government backing for the computer chip industry have scattershot ambitions.

Some experts say that more U.S.-made computer chips can protect the U.S. from China’s military or technology ambitions. Others want it to help clear manufacturing log jams for cars, or to help keep the U.S. on the cutting edge of scientific research. The military wants chips made in America to secure fighter jets and laser weapons.

That’s a lot of hope for government policy, and it might not all be realistic.

“There is a lack of precision in thought,” said Robert D. Atkinson, the president of the Information Technology and Innovation Foundation, a research group that supports U.S. government funding for essential technologies including computer chips. (The group gets funding from telecom and tech companies, including the U.S. computer chip giant Intel.)

Atkinson told me that he backed the proposals winding through Congress for government help for tech research and development, and for taxpayer subsidies for U.S. chip factories. But he also said that there was a risk of U.S. policy treating all domestic technology manufacturing as equally important. “Maybe it would be nice if we made more solar panels, but I don’t think that’s strategic,” he said.

Atkinson and people whom I spoke to in the computer chip industry say that there are important ways that computer chips are not like iPhones, and that it would be helpful if more were made on U.S. soil. About 12 percent of all chips are manufactured in the U.S.

In their view, manufacturing expertise is tied to tech innovation, and it’s important for America to keep sharp skills in computer chip manufacturing.

“We are one of the three nations on Earth that can do this,” Al Thompson, the head of U.S. government affairs for Intel, told me. “We don’t want to lose this capacity.” (South Korea and Taiwan are the other two countries with top-level chip manufacturing expertise.)

It’s tough to discern how important it is to make more chips in U.S. factories. I’m mindful that putting taxpayer money into chip plants that take years to start churning out products won’t fix pandemic-related chip shortages that made it tough to buy Ford F-150s and video game consoles.

Asian factories will also continue to dominate chip manufacturing no matter what the U.S. government does. If production in the U.S. increases to, say, 20 percent, future pandemics or a crisis in Taiwan could still leave the U.S. economy vulnerable to chip shortages.

What’s happening with computer chips is part of a broad question in both U.S. policy and our American mind-set: What should the U.S. do about a future in which technology is becoming less American? This is the future. We need policymakers to be asking where this matters, where it doesn’t, and where the government should focus its attention to keep the country strong.

Before we go …

- “The goal is not to win, but to cause chaos and suspicion until there is no real truth.” My colleagues have a detailed investigation of China’s government providing pay and support for a crop of online personalities who portray cheery aspects of life as foreigners in the country, and hit back at criticisms of Beijing’s authoritarian governance and policies. (My colleague Paul Mozur has more in his Twitter thread.)

- Facebook did an oopsie. The company now named Meta (formerly Facebook), which is focused on a future version of the internet it calls the “metaverse,” recently blocked the nine-year-old Instagram account of an Australian artist with the handle @metaverse. After being contacted by The New York Times, the company said it was a mistake and restored the account, Maddison Connaughton reported.

- Old Man Yang can definitely beat me at Gran Turismo Sport: Sixth Tone, a Chinese tech publication, introduces us to older people in China, including one nicknamed Old Man Yang, who have fallen in love with video games and have won online fans for their skills. And The Wall Street Journal talked to teams of seniors in Japan who play competitive video games known as e-sports. (A subscription is required.)

Hugs to this

Here is a tale of the adventures of Cosmo, a crow in Oregon that loves to hang around people, and talk (and curse) at them. (Thanks to my colleague Adam Pasick for sharing this.)
